What are embeddings in machine learning?

Every now and then, you need embeddings when training machine learning models. But what exactly is such an embedding and why do we use it?

Basically, an embedding is a mapping from one representation of your data into a vector space of a different (usually much lower) dimensionality. Doesn’t make things much clearer, does it?

So, let’s consider an example: we want to train a recommender system on a movie database (the typical Netflix use case). We have many movies, along with the ratings that users have given to those movies.
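To make the idea a bit more concrete, here is a minimal sketch of what an embedding looks like in code: a lookup table that maps each movie or user ID to a short dense vector. Everything in it is an assumption for illustration: the IDs, the dimension of 4, the random initial values, and the dot-product score. In a real recommender the vectors would be learned from the ratings rather than drawn at random.

```python
import random

EMBEDDING_DIM = 4  # assumed size; real systems often use tens to hundreds


def build_embedding_table(ids, dim, seed=0):
    """Map every ID to a dense vector of length `dim`.

    The values are random placeholders; training would adjust them.
    """
    rng = random.Random(seed)
    return {item: [rng.uniform(-0.1, 0.1) for _ in range(dim)] for item in ids}


def dot(u, v):
    """Dot product of two equally sized vectors."""
    return sum(a * b for a, b in zip(u, v))


# Hypothetical IDs, not taken from any real dataset.
movies = ["movie_a", "movie_b", "movie_c"]
users = ["user_1", "user_2"]

movie_embeddings = build_embedding_table(movies, EMBEDDING_DIM, seed=1)
user_embeddings = build_embedding_table(users, EMBEDDING_DIM, seed=2)


def predict_score(user_id, movie_id):
    """Score a (user, movie) pair as the dot product of their embeddings."""
    return dot(user_embeddings[user_id], movie_embeddings[movie_id])


print(movie_embeddings["movie_a"])
print(predict_score("user_1", "movie_a"))
```

The point of the sketch is the shape of the data: instead of one sparse column per movie, every movie becomes a point in a small shared space, and closeness in that space can stand in for similarity once the vectors are trained.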

Bash: Keep Script Running - Restart on Crash

When you are prototyping and developing small scripts that you keep running, it might be annoying that they quit when an error occurs. If you want very basic robustness against these crashes, you can at least use a bash script to automatically restart your script on error.

The construct to use here is bash’s until loop, which makes this a breeze.

Let’s use a dumb example Python script called test.py:

import time while True: print('Looping') time.

Writing command-line tools in Python: argument parsing

Python is a great language to build command-line tools in as it’s very expressive and concise.

For a tool to be useful, you want to parse arguments in your scripts rather than hard-code the relevant variable values.

So how do we go about this in Python? It’s easily done using the argparse module.

With argparse, you define the arguments you expect, their default values, and the short flags that can be used to pass them.
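As a rough illustration of that description, the following sketch defines two arguments with defaults and short flags. The tool itself (a greeter) and the names `--name` and `--count` are made up for this example; only the `argparse` calls come from the standard library.

```python
import argparse


def build_parser():
    """Create a parser with two optional arguments (hypothetical names)."""
    parser = argparse.ArgumentParser(description="Greet someone a number of times.")
    # "-n" is the short flag, "--name" the long one; "world" is the default.
    parser.add_argument("-n", "--name", default="world", help="who to greet")
    # type=int makes argparse convert and validate the value for us.
    parser.add_argument("-c", "--count", type=int, default=1, help="number of greetings")
    return parser


def main(argv=None):
    # argv=None tells argparse to read from sys.argv[1:].
    args = build_parser().parse_args(argv)
    for _ in range(args.count):
        print(f"Hello, {args.name}!")


if __name__ == "__main__":
    main()
```

Saved under a hypothetical filename such as greet.py, this could be called as `python greet.py -n Ada --count 2`, and `python greet.py --help` would print the usage text that argparse generates automatically.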

Better unit tests in Django using Mommy

Unit testing your models in Django

As a good developer, you write unit tests, of course. You will probably even write your tests before implementing your logic in a test-driven approach!

However, when developing complex models which have interactions and foreign keys, writing tests can get messy and complicated.

Say you want to test a model which has many dependencies on other models via foreign keys. To create an instance of your model, you first need to create all the other model instances it refers to, and that can be pretty tiring.

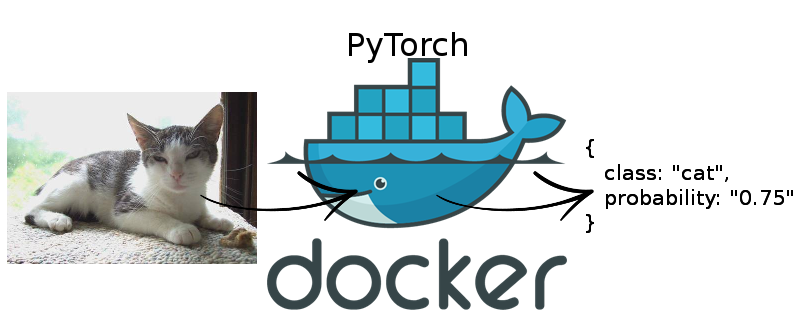

PyTorch GPU inference with Docker and Flask

GPU inference

In a previous article, I illustrated how to serve a PyTorch model in a serverless manner on AWS Lambda. However, AWS Lambda and other serverless compute functions currently usually run on the CPU. But what if you need to serve your machine learning model on a GPU because the CPU just doesn’t cut it for inference?

In this article, I will show you how to use Docker to serve your PyTorch model for GPU inference and also provide it as a REST API.