PyTorch GPU inference with Docker and Flask

In a previous article, I illustrated how to serve a PyTorch model in a serverless manner on AWS Lambda. However, AWS Lambda and other serverless compute functions currently usually run on the CPU only. But what if the CPU just doesn’t cut it and you need to run inference for your machine learning model on the GPU?

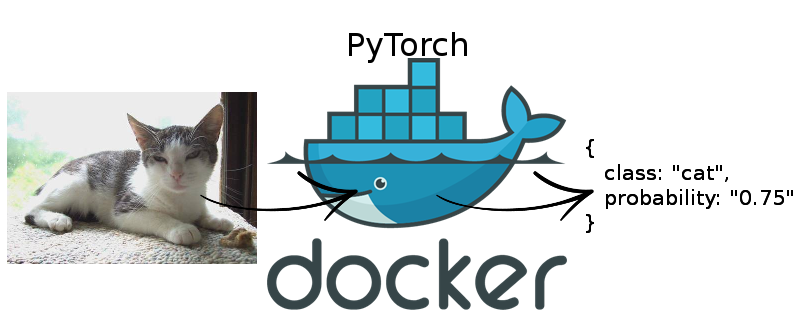

In this article, I will show you how to use Docker to serve your PyTorch model for GPU inference and expose it as a REST API with Flask.